The Oobabooga Text Generation Web UI has released version 3.9 of its locally hosted, customizable interface for working with large language models (LLMs). The application serves as a personal AI playground: users can run their own ChatGPT-style setup without sending prompts or data to a cloud service. Built with Gradio, a Python library for creating web interfaces for machine learning, it provides a straightforward chat and text generation environment. Users have full control over prompts, model selection, and outputs, which makes it a strong tool for developers, researchers, and hobbyists who want to experiment with LLMs locally.

The interface supports several backends, including Hugging Face Transformers, llama.cpp, ExLlamaV2, and NVIDIA’s TensorRT-LLM (via Docker). From a single interface, users can load models, fine-tune them with LoRA, switch between chat modes, and access an OpenAI-compatible API. Built-in extension support adds further functionality, such as multimodal features and streaming, all within a browser-based UI.
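
For readers unfamiliar with LoRA (low-rank adaptation), the core idea fits in one equation: instead of updating a full weight matrix, training learns two small matrices whose product is added to the frozen original weights. The dimensions in the worked example (d = k = 4096, rank r = 8) are illustrative values chosen for the arithmetic, not settings taken from Oobabooga.

```latex
W' = W + \Delta W = W + BA, \qquad
W \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\text{trainable parameters: } r(d + k) \ \text{instead of}\ dk

d = k = 4096,\ r = 8:\quad 8 \times (4096 + 4096) = 65{,}536 \ \text{vs.}\ 4096^2 = 16{,}777{,}216 \ (\approx 0.39\%)
```

Because only A and B are trained, a LoRA adapter is small enough to store and swap separately from the base model.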

Key features include running LLMs locally without an internet connection once models are downloaded, swapping models seamlessly, fine-tuning them, using the OpenAI-compatible API, saving chat histories, and adjusting advanced generation settings to tailor outputs. This makes Oobabooga well suited to tasks such as testing custom models, building chatbots, writing stories, or automating content generation.
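
As a rough sketch of how the OpenAI-compatible API can be used, the Python snippet below sends a chat request using only the standard library. It assumes the server was started with the API enabled (the `--api` flag) and is listening on `http://127.0.0.1:5000/v1/chat/completions`. Check your version's documentation, since the port and flags may differ. The helper names `build_chat_request`, `extract_reply`, and `chat` are hypothetical, and the sampling values are illustrative.

```python
import json
import urllib.request

# Assumed endpoint when the web UI is launched with --api; adjust if yours differs.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(prompt: str, temperature: float = 0.7,
                       max_tokens: int = 200) -> dict:
    """Build an OpenAI-style chat completion payload (hypothetical helper)."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # illustrative sampling setting
        "max_tokens": max_tokens,    # cap on the number of generated tokens
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str, url: str = API_URL, timeout: float = 60.0) -> str:
    """POST the payload to the local server and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__":
    try:
        print(chat("Write a haiku about local LLMs."))
    except (OSError, ValueError, KeyError) as exc:
        # Server not running, HTTP error status, or an unexpected response shape.
        print(f"Request failed: {exc}")
```

Because the request follows OpenAI's format, existing OpenAI client code can often be pointed at the local URL instead of writing a custom client.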

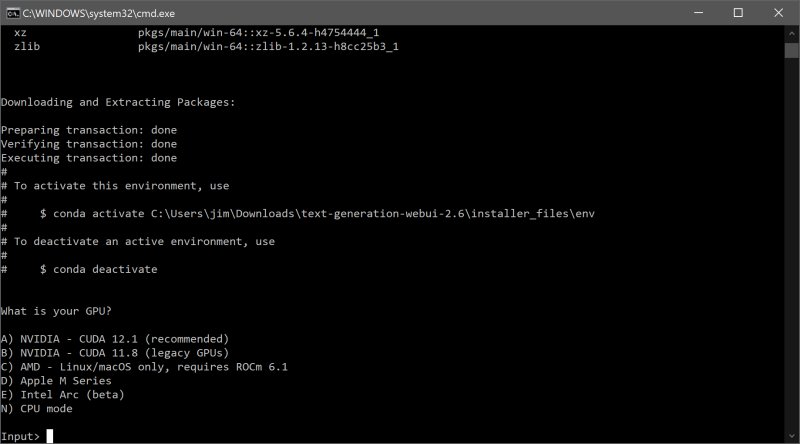

To install and run Oobabooga, users download the zip file, unzip it, and run the start script for their operating system. After the user selects a GPU vendor or chooses CPU mode, the program starts, and the interface becomes available at http://localhost:7860 in a web browser. Users can then download models from sources such as Hugging Face, place them in the designated models folder, and start generating text.
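
After launching, a small script can confirm the setup by checking whether the interface answers at http://localhost:7860 and listing what sits in the models folder. This is a hypothetical helper, not part of Oobabooga. The `models` path is an assumption to adjust for your installation, and so is the detection rule: single `.gguf` files (the llama.cpp format) and subfolders (typical for Transformers models) are counted as models.

```python
import urllib.request
from pathlib import Path

UI_URL = "http://localhost:7860"  # Gradio's default address, as described above
MODELS_DIR = Path("models")       # assumption: point this at your install's models folder


def ui_is_up(url: str = UI_URL, timeout: float = 3.0) -> bool:
    """Return True if the URL answers an HTTP request with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Connection refused, timeout, or an HTTP error status.
        return False


def list_models(models_dir: Path = MODELS_DIR) -> list[str]:
    """List likely model entries: single-file GGUF models and model subfolders."""
    if not models_dir.is_dir():
        return []
    entries = []
    for item in models_dir.iterdir():
        if item.name.startswith("."):
            continue  # skip hidden files and folders
        if item.is_dir() or item.suffix == ".gguf":
            entries.append(item.name)
    return sorted(entries)


if __name__ == "__main__":
    print("Web UI reachable:", ui_is_up())
    print("Models found:", list_models() or "none")
```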

The tool has significant advantages: it is a fully local, private AI sandbox, and it supports multiple backends and extensions. On the other hand, it can be resource-intensive depending on the models used, and its manual setup may present a learning curve for some users.
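
To put "resource-intensive" in rough numbers, model weights alone need about (parameters × bits per weight ÷ 8) bytes. The sketch below applies that rule of thumb. It is a lower bound that ignores the context (KV) cache, activations, and backend overhead, so real usage will be higher. The model sizes and bit widths are illustrative, not measurements.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # (params_billion * 10**9 params) * bytes each / 10**9 bytes per GB
    return params_billion * bytes_per_weight


if __name__ == "__main__":
    for params in (7, 13, 70):
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{params}B model at {bits}-bit: ~{gb:.1f} GB")
```

For example, a 7B model needs about 14 GB for its weights at 16-bit precision but roughly 3.5 GB at 4-bit. This is why quantized formats such as GGUF are popular for running larger models on consumer hardware.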

In summary, Oobabooga Text Generation Web UI is a powerful tool for anyone interested in LLM experimentation, offering a local, customizable environment for AI exploration. As AI technology continues to evolve, tools like Oobabooga are likely to play an important role in broadening access to advanced language models, supporting work in content generation, automated responses, and interactive AI applications.
