Ollama has released version 0.7.0 of its local-first platform, which runs large language models (LLMs) directly on the user's desktop. Models run locally, with no reliance on the cloud and no account creation, which keeps data private and under the user's control. With Ollama, developers and tech enthusiasts can run models such as Llama 3.3, Phi-4, Mistral, and DeepSeek.

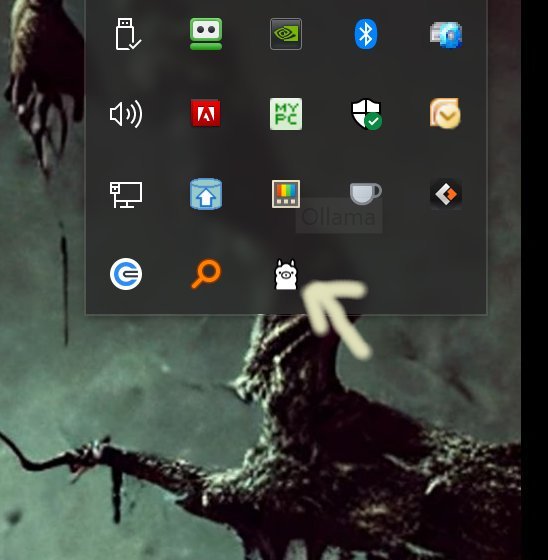

Installation is straightforward: download and install Ollama, and it runs quietly in the system tray. It is compatible with Windows, macOS, and Linux. The platform is built around a command-line interface (CLI), which is used to download and run models, chat with them, and create custom versions.
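As a rough illustration of the CLI workflow, the sketch below wraps the `ollama` executable from Python. It assumes Ollama is installed and on the `PATH`; the helper names `ollama_command` and `run_ollama` are hypothetical and exist only for this example, and `mistral` stands in for whichever model you choose.

```python
import shutil
import subprocess


def ollama_command(*args: str) -> list[str]:
    """Build an argv list for the ollama CLI (hypothetical helper)."""
    return ["ollama", *args]


def run_ollama(*args: str) -> str:
    """Run an ollama subcommand and return its standard output as text."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("the 'ollama' executable was not found on PATH")
    result = subprocess.run(
        ollama_command(*args),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout


# Example usage (requires a working Ollama install):
#   run_ollama("pull", "mistral")                         # download a model
#   print(run_ollama("list"))                             # show installed models
#   print(run_ollama("run", "mistral", "Say hi in five words."))
```

The same three subcommands (`pull`, `list`, `run`) can of course be typed directly in a terminal; the wrapper only matters when scripting them.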

Ollama stands out for its local execution: prompts are answered on the user's own hardware, so there are no round trips to a cloud service and no data leaves the machine. Users can chat with models, swap them out, or create their own through simple commands and Modelfiles. Python and JavaScript libraries also let developers integrate Ollama into their applications.
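To give a sense of what application integration can look like, here is a minimal sketch that talks to the local Ollama server over HTTP using only the Python standard library, rather than the dedicated Python library. It assumes the server is listening on its default address, `http://localhost:11434`, and that the chosen model has already been downloaded; the function names `build_request` and `generate` are illustrative.

```python
import json
import urllib.request

# Assumption: the local Ollama server is running on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a non-streaming generate request for the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Example usage (requires the server to be running):
#   print(generate("mistral", "Summarize what a Modelfile is in one sentence."))
```

Because the request never leaves `localhost`, the prompt and the reply both stay on the machine.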

While Ollama excels as a CLI tool, alternative interfaces such as OLamma and Web UI are available, though they may lack some advanced command functionality. Users can customize model behavior, define system instructions, and script outputs or batch processes directly from the terminal. Comprehensive documentation covers command usage, model management, and customization options.
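Customization of this kind is typically expressed in a Modelfile. The sketch below generates one from Python, using the `FROM`, `PARAMETER`, and `SYSTEM` instructions; the helper `render_modelfile`, the model name `terse-mistral`, and the chosen temperature are all assumptions made for illustration.

```python
def render_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return Modelfile text that sets a base model, a sampling
    temperature, and a system instruction."""
    lines = [
        f"FROM {base_model}",
        f"PARAMETER temperature {temperature}",
        f'SYSTEM """{system_prompt}"""',
    ]
    return "\n".join(lines) + "\n"


# Example usage: write the file, then build and run the custom model
# from a terminal (model name is hypothetical):
#   with open("Modelfile", "w", encoding="utf-8") as fh:
#       fh.write(render_modelfile("mistral", "Answer in one short sentence.", 0.2))
#   ollama create terse-mistral -f Modelfile
#   ollama run terse-mistral "What is a system tray?"
```

Generating the file from a script makes it easy to produce several variants of the same base model for batch comparisons.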

In summary, Ollama 0.7.0 is a powerful, efficient, and user-friendly tool for those comfortable with command-line operations. It offers unmatched local LLM capabilities while maintaining user privacy. However, it may not appeal to those who prefer graphical user interfaces. For anyone seeking a robust local AI solution, Ollama is poised to deliver impressive performance and flexibility.

Extension: As technology continues to evolve, the demand for privacy-focused AI solutions is increasing. Ollama's local-first approach addresses these concerns and gives users full control over their AI interactions. Future updates could include enhanced user interfaces to cater to a broader audience, potentially with hybrid designs that blend CLI efficiency with graphical elements. Additionally, as more sophisticated models are developed, Ollama could expand its library, allowing users to explore even more diverse applications of AI in their personal and professional lives.

