Ollama has released version 0.12.7 as a pre-release, following the stable 0.12.6 release. Ollama lets users run large language models (LLMs) directly on their desktops without relying on cloud services, so prompts and data stay on the local machine and under the user's control. It supports a range of popular models, including Llama 3.3, Phi-4, Mistral, and DeepSeek, and is aimed at developers, enthusiasts, and privacy advocates who prefer a local-first approach to AI.

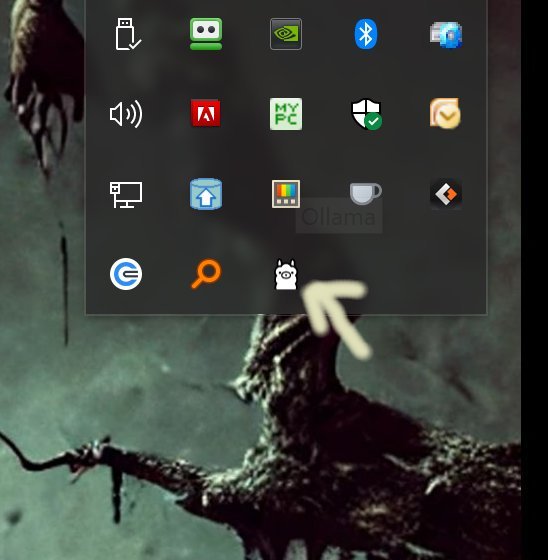

Installing Ollama is straightforward: download the application and run it, after which it can be reached from the system tray. It runs on Windows, macOS, and Linux. Its command-line interface (CLI) handles quick interactions and customization, letting users chat with models, switch between them, or create custom models with simple commands or Modelfiles.
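Because the app runs a local server in the background, the models available for switching can also be listed programmatically. The sketch below is an illustration under stated assumptions: it assumes the server is on its default address, `http://localhost:11434`, and that its `/api/tags` endpoint returns JSON with a `models` array whose entries carry a `name` field. The helper names `parse_model_names` and `installed_models` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local address and its model-listing endpoint.
TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(tags_json: str) -> list[str]:
    """Extract the sorted model tags from an /api/tags JSON response."""
    data = json.loads(tags_json)
    return sorted(entry["name"] for entry in data.get("models", []))


def installed_models(url: str = TAGS_URL) -> list[str]:
    """Ask a running Ollama server which models are installed locally."""
    with urllib.request.urlopen(url) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

On a machine with Ollama running, `installed_models()` returns the installed tags (for example `llama3:latest` after `ollama pull llama3`). The parsing is kept separate from the network call so it can be checked without a server.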

Key features of Ollama include:

- Local Execution: Everything runs on the user's device, so prompts and data are never sent to a cloud service.

- Cross-Platform Compatibility: Runs on Windows, macOS, and Linux.

- Full CLI Power: Provides extensive command-line capabilities for advanced users who want to script their interactions.

- Modelfile Customization: Users can import different model formats and tweak prompts to create tailored AI assistants.

- Developer-Friendly: Official Python and JavaScript libraries make it easy to integrate Ollama into applications.
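The Python and JavaScript integrations talk to a local HTTP API. As a rough illustration, here is a minimal sketch using only the Python standard library rather than the official client library. It assumes the server is listening on its default address, `http://localhost:11434`, that the `llama3` model has already been pulled, and that the `/api/generate` endpoint, with streaming turned off, returns a single JSON object whose `response` field holds the text. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send one prompt to a locally running Ollama server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the whole answer arrives in the "response" field.
    return body["response"]
```

With Ollama running, `generate("llama3", "Explain quantum computing in simple terms")` returns the model's answer as a string. Disabling streaming keeps the example short; an interactive app would more likely read the streamed chunks as they arrive.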

Ollama is optimized for CLI use, but users who prefer graphical interfaces can try community-created options such as OLamma and Web UI, although these may not expose every command. The CLI itself allows full customization, including defining system instructions and adjusting model behavior.
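System instructions and model behavior are usually set in a Modelfile. The following is a minimal sketch that renders one from Python. It assumes the `FROM`, `PARAMETER`, and `SYSTEM` Modelfile instructions and the `ollama create <name> -f <file>` command. The base model, the temperature value, and the helper names `render_modelfile` and `create_command` are illustrative assumptions, not taken from the article.

```python
def render_modelfile(base: str, system: str, temperature: float) -> str:
    """Build Modelfile text: a base model, one sampling parameter, and a system prompt."""
    lines = [
        f"FROM {base}",
        f"PARAMETER temperature {temperature}",
        # Triple quotes allow a multi-line system prompt (assumes the prompt
        # itself contains no triple quotes).
        f'SYSTEM """{system}"""',
    ]
    return "\n".join(lines) + "\n"


def create_command(name: str, modelfile_path: str) -> list[str]:
    """Argument list for registering the Modelfile as a new local model."""
    return ["ollama", "create", name, "-f", modelfile_path]
```

Writing the rendered text to a file named `Modelfile` and running the command returned by `create_command("tutor", "Modelfile")` would register a custom model (the name `tutor` is hypothetical) that can then be started with `ollama run tutor`.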

The documentation covers the available commands and setup options. For example, `ollama pull llama3` downloads a model, and `ollama run llama3 "Explain quantum computing in simple terms"` sends a single prompt and prints the answer, showing how simple model management is.
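The same two commands can be scripted. This sketch assumes the `ollama` binary is on `PATH` and passes the prompt as a positional argument to `ollama run`, which then prints the answer and exits instead of opening an interactive chat. `pull_command`, `run_command`, and `ask` are hypothetical helper names.

```python
import shutil
import subprocess


def pull_command(model: str) -> list[str]:
    """Argument list that downloads (or updates) a model."""
    return ["ollama", "pull", model]


def run_command(model: str, prompt: str) -> list[str]:
    """Argument list for a one-shot prompt; the prompt is a positional argument."""
    return ["ollama", "run", model, prompt]


def ask(model: str, prompt: str) -> str:
    """Pull the model if needed, send one prompt, and return the printed answer."""
    if shutil.which("ollama") is None:
        raise RuntimeError("the ollama CLI is not on PATH")
    subprocess.run(pull_command(model), check=True)
    result = subprocess.run(
        run_command(model, prompt), check=True, capture_output=True, text=True
    )
    return result.stdout.strip()
```

Passing an argument list rather than a shell string to `subprocess.run` avoids quoting problems when a prompt contains spaces or quotation marks, and building the lists in separate functions keeps them easy to inspect.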

In conclusion, Ollama is a fast, efficient, and fully local way to use capable language models without depending on online services. Its command-line focus will not appeal to everyone, but it is an excellent choice for users comfortable with text-based interfaces. Going forward, Ollama could reach a broader audience by expanding its user interfaces while keeping its core focus on privacy and local execution.
