Ollama has released its pre-release version 0.10.0 RC2 and version 0.9.6, continuing to solidify its position as a local-first platform that allows users to run large language models (LLMs) directly on their desktops. By eliminating the need for cloud services, accounts, and internet connections, Ollama prioritizes privacy and user control, making it ideal for developers and privacy-conscious individuals alike.

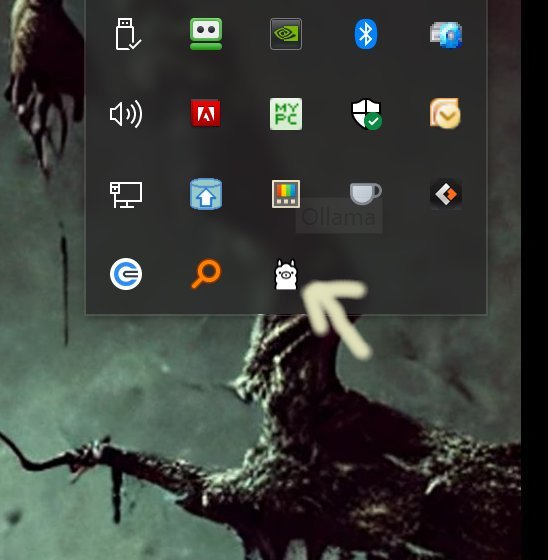

The platform supports a range of high-performance models, including Llama 3.3, Phi-4, and Mistral, and is easy to install: users download and run the application, and a llama icon in the system tray shows that it is running. Ollama runs on Windows, macOS, and Linux. Once a model has been downloaded it works entirely offline, so prompts and responses stay on the user's machine and response speed depends only on the local hardware.
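
Besides the CLI, a running Ollama instance serves a local HTTP API, by default at `http://localhost:11434`, so requests never have to leave the machine. The Python sketch below builds a non-streamed request to the `/api/generate` endpoint using only the standard library. It assumes the default port is unchanged and that a model tagged `llama3.3` has already been pulled; the helper names are illustrative and not part of any official Ollama client.

```python
import json
import urllib.request

# Assumption: the Ollama server is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request asking the local server for one non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request to the local server and return the generated text."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with a single JSON object;
    # the completion text is in its "response" field.
    return body["response"]
```

Calling `generate("llama3.3", "Why is the sky blue?")` requires Ollama to be running locally; no cloud account or internet connection is involved in the request itself.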

Ollama's command-line interface (CLI) is a standout feature, giving advanced users fine-grained control over how they interact with the models. With Modelfiles, users can define system instructions, customize prompts and model parameters, and import models in various formats. This makes it possible to build specialized assistants tailored to specific needs or preferences.
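
As an illustration, the Python sketch below renders a minimal Modelfile: a `FROM` line naming the base model, optional `PARAMETER` lines, and a `SYSTEM` instruction. The function name, the `llama3.3` base model, and the example system prompt are assumptions for this example; Ollama itself only reads the resulting Modelfile text, not this helper.

```python
from __future__ import annotations


def render_modelfile(
    base_model: str,
    system_prompt: str,
    parameters: dict[str, object] | None = None,
) -> str:
    """Return Modelfile text with FROM, optional PARAMETER lines, and a SYSTEM block."""
    lines = [f"FROM {base_model}"]
    # Each parameter becomes its own line, e.g. "PARAMETER temperature 0.7".
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    # Triple quotes allow the system instruction to span multiple lines.
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


text = render_modelfile(
    "llama3.3",
    "You are a concise assistant.",
    {"temperature": 0.7},
)
```

After saving `text` to a file named `Modelfile`, `ollama create my-assistant -f Modelfile` builds the custom model and `ollama run my-assistant` starts it; `my-assistant` is a hypothetical name.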

While Ollama excels as a command-line tool, alternatives such as OLamma and various web UIs exist for those less comfortable with the terminal, albeit at the cost of some functionality. For users who prefer a graphical user interface (GUI), LM Studio is a desktop alternative that supports web browsing. Even so, Ollama's CLI remains the most powerful way to use its capabilities.

Ollama's documentation covers the CLI in detail, including commands for downloading models, running sessions, and managing installed models. Its example commands show how to pull a model, start an interactive chat session, and run a one-off prompt.
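
The tasks listed above map onto a handful of `ollama` subcommands: `pull`, `run` (interactive, or with a prompt argument for a one-off answer), `list`, and `rm`. The Python sketch below wraps them as argument lists for `subprocess`. The wrapper functions are hypothetical helpers, and the sketch assumes the `ollama` binary is on `PATH`.

```python
from __future__ import annotations

import shutil
import subprocess


def pull(model: str) -> list[str]:
    """Download a model from the Ollama library."""
    return ["ollama", "pull", model]


def chat(model: str) -> list[str]:
    """Start an interactive chat session (needs an attached terminal)."""
    return ["ollama", "run", model]


def one_off(model: str, prompt: str) -> list[str]:
    """Answer a single prompt, print the result, and exit."""
    return ["ollama", "run", model, prompt]


def list_models() -> list[str]:
    """Show the models installed locally."""
    return ["ollama", "list"]


def remove(model: str) -> list[str]:
    """Delete an installed model."""
    return ["ollama", "rm", model]


def run_ollama(argv: list[str]) -> str:
    """Run a non-interactive ollama command and return its standard output."""
    if shutil.which(argv[0]) is None:
        raise FileNotFoundError("ollama executable not found on PATH")
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `run_ollama(pull("phi4"))` downloads Phi-4 and `run_ollama(one_off("phi4", "Summarize this paragraph."))` returns a single answer. An interactive session is better started with `subprocess.run(chat("phi4"))`, which leaves the terminal attached instead of capturing output.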

In summary, Ollama provides a fast, efficient, and entirely local AI experience, free from the limitations of cloud dependency and data privacy concerns. While its CLI may pose a challenge for non-technical users, those who are comfortable with terminal commands will find Ollama an invaluable resource for harnessing the power of large language models on their own devices.

Extension: Future Potential of Ollama

As Ollama continues to evolve, possible enhancements include a more user-friendly GUI for those not versed in command-line operations, which could broaden its user base. Expanding the library of compatible models and adding customization options could further increase the platform's appeal. Community contributions and feedback could also lead to features that serve diverse user needs, making Ollama not just a tool for developers but a versatile platform for anyone interested in AI. Finally, partnerships with educational institutions and businesses could lead to Ollama being integrated into a wider range of applications, broadening access to powerful AI tools.

Ollama 0.10.0 Pre-Release RC2 / 0.9.6 released

Ollama is the local-first platform that brings large language models (LLMs) right to your desktop.