KoboldCPP is a tool for running AI language models directly on your own computer, with no accounts, internet connection, or subscriptions, and without giving up privacy. This lightweight, open-source application keeps every interaction on your machine, which makes it suitable for private business projects, storytelling, game lore development, or AI experimentation, all without relying on the cloud.

What Is KoboldCPP?

KoboldCPP serves as a backend for the KoboldAI web interface, providing a user-friendly chat experience similar to ChatGPT, but entirely offline. Users simply download a GGUF-format model file, launch KoboldCPP, and begin interacting with the AI. The tool supports both CPU and GPU acceleration, accommodating a range of hardware configurations.

Understanding GGUF and Compatible Models

GGUF stands for GPT-Generated Unified Format, a model file format tailored for local use. It is lightweight and efficient, especially for quantized models (4-bit or 8-bit), which need less memory and are therefore easier to run on mid-range computers. Many popular open-source models on platforms like Hugging Face are available in GGUF format. However, KoboldCPP does not support GPTQ, Safetensors, or proprietary models from OpenAI, so verify that a model is compatible before downloading it.
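
One quick way to verify that a downloaded file really is GGUF is to look at its first bytes. The sketch below assumes the GGUF header layout as commonly documented (the ASCII magic `GGUF` followed by a little-endian 32-bit version number); the function name `read_gguf_version` is made up for this illustration and is not part of KoboldCPP.

```python
import struct

GGUF_MAGIC = b"GGUF"  # assumed: the first four bytes of a GGUF file


def read_gguf_version(path):
    """Return the GGUF version number, or None if the file is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The magic is followed by an unsigned 32-bit little-endian version.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

Calling `read_gguf_version("model.gguf")` on a file in another format, such as a Safetensors checkpoint, would return `None`, which is a cheap check to run before pointing KoboldCPP at the file.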

Target Audience for KoboldCPP

This tool is perfect for individuals who want to explore AI without the drawbacks of cloud services. It caters to writers looking to co-create narratives, RPG developers seeking AI-driven NPCs, developers experimenting with local AI setups, and anyone desiring a private and offline ChatGPT-like experience. KoboldCPP is especially beneficial in low-connectivity environments or for users frustrated by cloud-based limitations, providing complete control over AI interactions and data.

Main Features of KoboldCPP

- Local LLMs: Supports GGUF models, including LLaMA-based ones, for offline use.

- Privacy: No internet or cloud server is required.

- Performance: Offers GPU acceleration for fast processing.

- Easy Setup: Minimal installation process; just unzip and launch.

- Compatibility: Works seamlessly with KoboldAI's web UI for enhanced interactivity.

- Customizable: Allows for adjustments in context size, memory use, and LoRA adapters.
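
The same settings can usually be passed on the command line instead of through the launcher window. The sketch below only builds the argument list; the flag names (`--model`, `--contextsize`, `--gpulayers`, `--port`) are what recent KoboldCPP builds appear to accept, and the executable name and model path are placeholders, so check `--help` on your own version before relying on them.

```python
import shlex


def build_launch_command(model_path, context_size=4096, gpu_layers=0,
                         port=5001, executable="koboldcpp"):
    """Build an argument list for starting KoboldCPP (flag names assumed)."""
    cmd = [
        executable,
        "--model", model_path,
        "--contextsize", str(context_size),
        "--port", str(port),
    ]
    # Only request GPU offloading when at least one layer is asked for.
    if gpu_layers > 0:
        cmd += ["--gpulayers", str(gpu_layers)]
    return cmd


# Print the command instead of running it, so it can be reviewed first.
print(shlex.join(build_launch_command("models/example.gguf", gpu_layers=20)))
```

Returning a list rather than a single string means the result can be handed directly to `subprocess.run` without shell quoting problems.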

Acquiring AI Models for KoboldCPP

KoboldCPP does not come bundled with AI models, so users must download them separately. Platforms like Hugging Face are recommended for sourcing GGUF models; on mid-range systems, focus on 4-bit or 8-bit versions.
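
To see why 4-bit and 8-bit versions matter, a rough size estimate helps. The sketch below assumes file size is simply parameters times bits per weight; real GGUF files are somewhat larger because quantization formats store extra scaling data, and the 7-billion-parameter figure is only an example.

```python
def estimate_model_size_gb(num_params_billion, bits_per_weight):
    """Rough model size in GB: parameters x bits per weight / 8 bits per byte."""
    total_bytes = num_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_ram(model_size_gb, ram_gb, headroom_gb=2.0):
    """True if the model leaves some headroom for the OS and context buffers."""
    return model_size_gb + headroom_gb <= ram_gb


for bits in (16, 8, 4):
    size = estimate_model_size_gb(7, bits)
    print(f"7B model at {bits}-bit: about {size:.1f} GB, "
          f"fits in 8 GB RAM: {fits_in_ram(size, 8)}")
```

Under these assumptions, only the 4-bit version of a 7B model fits comfortably on a machine with 8 GB of RAM; the 2 GB headroom is an arbitrary illustrative value.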

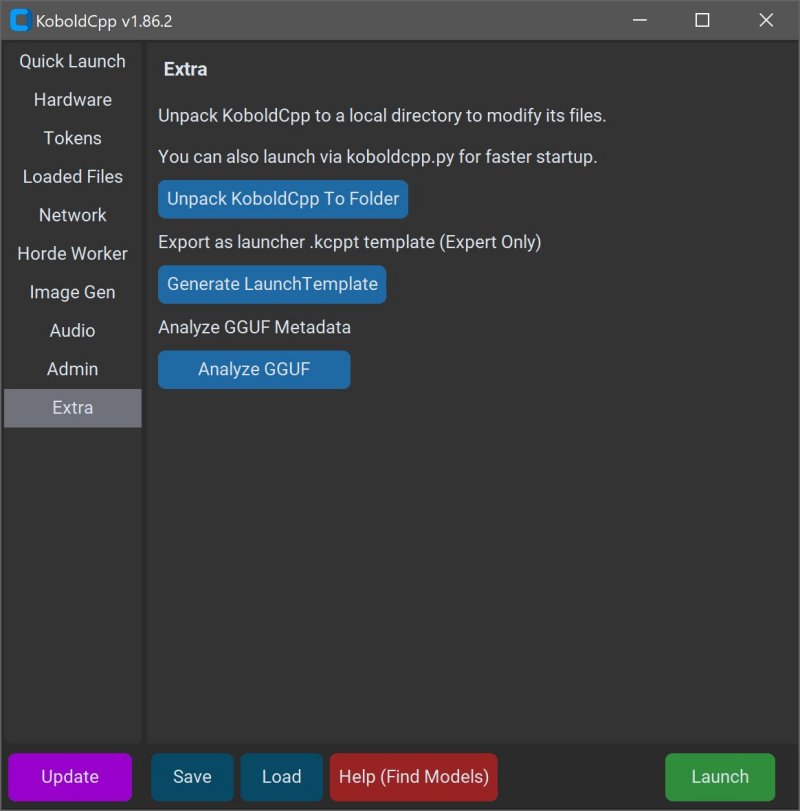

Using KoboldCPP: Step-by-Step Guide

1. Launch KoboldCPP.exe.

2. Select your downloaded .gguf model file.

3. Choose a backend (CPU, CUDA for NVIDIA, or oneAPI for Intel).

4. Adjust settings (context size, memory, etc.).

5. Click Start to open a local web chat in your browser.

6. Engage with the AI as you would in any other chatbot interface.
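
Once the server is running, the model can also be reached from a script rather than the browser. This is a minimal sketch that assumes KoboldCPP is listening on its usual local port (5001) and exposes the KoboldAI-style `/api/v1/generate` endpoint; the URL, the payload fields, and the response shape are assumptions to confirm against your version's API documentation.

```python
import json
import urllib.request

API_URL = "http://localhost:5001/api/v1/generate"  # assumed default address


def build_payload(prompt, max_length=80, temperature=0.7):
    """Assemble the JSON body for a generation request (field names assumed)."""
    return {"prompt": prompt, "max_length": max_length,
            "temperature": temperature}


def extract_text(response_body):
    """Pull the generated text out of the assumed response shape."""
    data = json.loads(response_body)
    return data["results"][0]["text"]


def generate(prompt, url=API_URL, timeout=120):
    """Send a prompt to a locally running server and return its reply."""
    body = json.dumps(build_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_text(response.read().decode("utf-8"))
```

With the server started as in the steps above, `generate("Once upon a time")` would return the model's continuation as a string. Nothing leaves the machine, since the request goes to `localhost`.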

System Requirements

KoboldCPP requires moderate hardware capabilities:

- OS: Windows or Linux

- RAM: Minimum of 8GB, 16GB+ recommended

- CPU: Modern Intel or AMD with AVX2 support

- GPU: Optional NVIDIA or Intel GPU for enhanced performance
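
On Linux, the CPU and RAM requirements can be checked from a script. The sketch below assumes an x86 system where `/proc/cpuinfo` has a `flags` line and `/proc/meminfo` has a `MemTotal` line in kB; the helper names are invented for this example, and Windows users would need a different method.

```python
def cpu_has_avx2(cpuinfo_text):
    """Return True if the first 'flags' line in cpuinfo lists avx2."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            return "avx2" in flags
    return False


def total_ram_gib(meminfo_text):
    """Return total RAM in GiB from meminfo text, or None if not found."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            kib = int(line.split()[1])  # value is reported in kB (KiB)
            return kib / (1024 ** 2)
    return None


def read_text(path):
    """Read a small text file such as /proc/cpuinfo."""
    with open(path, "r", encoding="utf-8") as f:
        return f.read()
```

A typical check would be `cpu_has_avx2(read_text("/proc/cpuinfo"))` together with `total_ram_gib(read_text("/proc/meminfo")) >= 8`. Keeping the parsing separate from the file reading makes the helpers easy to test with sample text.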

Things to Keep in Mind

While the initial setup may seem technical, the process becomes straightforward once you are familiar with it. Users are encouraged to start with smaller models and move to larger ones as their system allows. Different versions of KoboldCPP target different hardware, so choosing the build that matches your system gives the best performance.

Conclusion

KoboldCPP lets users run AI without relying on the cloud, worrying about data privacy, or paying subscription fees. This flexible tool is well suited to writers, gamers, and anyone interested in a private AI assistant. With ongoing support from the open-source community, users can find guidance on model selection and customization. KoboldCPP is an accessible and efficient way to bring AI capabilities directly to your desktop.

KoboldCPP 1.97 released

KoboldCPP is a great choice for running powerful AI models on your computer with no accounts, internet, subscriptions, or privacy trade-offs.