KoboldCPP 1.90 has been released, presenting a powerful and private solution for running AI models directly on your computer without the need for accounts, internet access, subscriptions, or privacy compromises. This open-source tool allows users to engage with AI in a secure and personal manner, making it ideal for business projects, creative writing, game development, or simply experimenting with AI in a local environment.

What is KoboldCPP?

KoboldCPP is a lightweight application designed to run GGUF-format AI language models locally, functioning as a backend for the KoboldAI web interface. It provides a user-friendly chat interface similar to ChatGPT, enabling users to download a model file and start interacting immediately. It supports both CPU and GPU acceleration, accommodating various hardware configurations.

Understanding GGUF

GGUF, or GPT-Generated Unified Format, is a model file format designed for local use and is particularly efficient with quantized models. Most popular open-source models on platforms like Hugging Face are available in this format. Proprietary models are not offered as GGUF, and models published only in certain other formats are not supported, so users should confirm that a GGUF version of their chosen model exists before downloading it.
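A quick way to confirm that a downloaded file really is GGUF is to look at its header. The sketch below assumes the usual GGUF layout, in which a file begins with the four ASCII bytes `GGUF` followed by a little-endian 32-bit version number. The function name `read_gguf_version` is hypothetical and is not part of KoboldCPP.

```python
import struct
from typing import Optional

GGUF_MAGIC = b"GGUF"  # assumed: the first four bytes of every GGUF file


def read_gguf_version(path: str) -> Optional[int]:
    """Return the GGUF version number, or None if the file is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)  # 4 magic bytes + 4 version bytes
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The version is stored as an unsigned 32-bit little-endian integer.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

A return value of `None` suggests the file is in another format, or that the download was incomplete or corrupted.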

Target Audience

KoboldCPP caters to those who wish to utilize AI without cloud dependency, including writers, RPG developers, and tech enthusiasts looking for a private, offline alternative to AI chatbots. It is especially beneficial for users in areas with limited connectivity who seek to avoid data privacy concerns associated with cloud services.

Key Features

- Local LLMs: Operate GGUF/LLAMA models directly on your PC.

- Offline Mode: Complete privacy with no reliance on internet or cloud servers.

- Fast Performance: Utilizes Intel oneAPI and NVIDIA CUDA for enhanced speed.

- Simple Setup: Easy installation without complex coding.

- KoboldAI Compatible: Access popular features such as memory and character cards.

- Customizable: Adjust settings for a more personalized experience.

Acquiring AI Models

KoboldCPP does not ship with AI models. Users can download them from trusted sources, primarily Hugging Face, and should pick 4-bit or 8-bit GGUF versions, which are the practical choice for mid-range systems.
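To see why the bit width matters, the size of a model's weights can be approximated as parameter count times bits per weight, divided by eight. The snippet below is a back-of-the-envelope sketch only. The 7-billion-parameter model is an illustrative assumption, and real GGUF files are somewhat larger because quantized formats store extra scaling data, with the context adding further memory use at run time.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough size of the model weights in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Hypothetical 7B-parameter model at three common bit widths.
for bits in (4, 8, 16):
    print(f"7B model at {bits}-bit: ~{estimate_weights_gb(7, bits):.1f} GB")
```

By this estimate a 4-bit version needs roughly a quarter of the space of a 16-bit one, which is what makes such models usable on machines with limited RAM.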

Usage Instructions

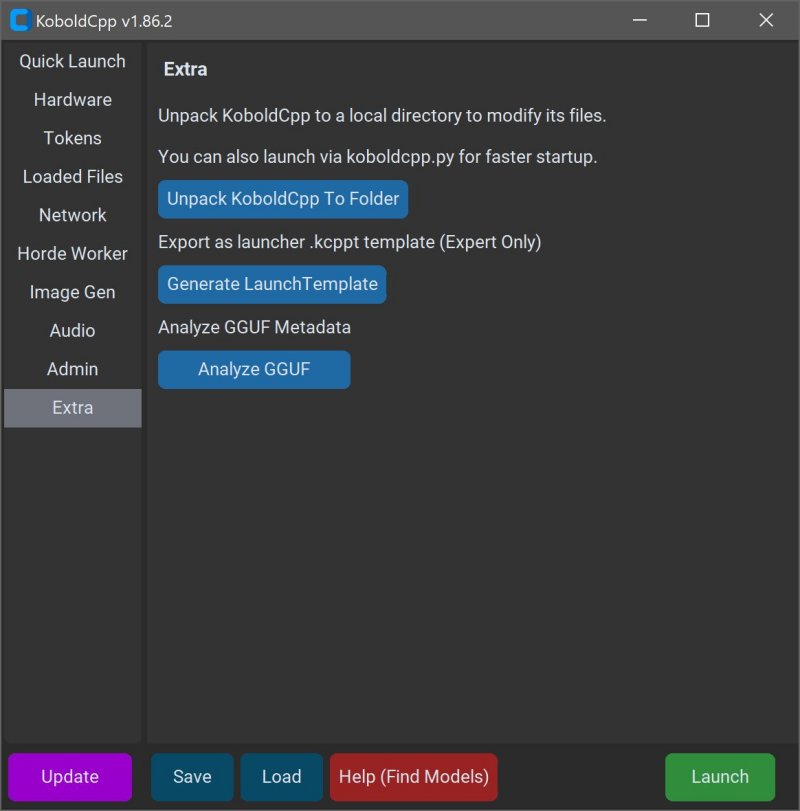

Once a model is downloaded, using KoboldCPP involves launching the application, selecting the model file, choosing the backend, and adjusting settings before starting the local web chat. Users can enhance their experience with additional features available within the interface.
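Besides the web chat, a running instance can also be queried from a script. The sketch below rests on assumptions you should check against your own setup: that the server listens on `http://localhost:5001`, and that it accepts a KoboldAI-style `POST /api/v1/generate` request with `prompt` and `max_length` fields and returns the text under `results[0].text`. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumed address: adjust host and port to match your running instance.
BASE_URL = "http://localhost:5001"


def build_request(prompt: str, max_length: int = 80) -> urllib.request.Request:
    """Build the POST request for the assumed /api/v1/generate endpoint."""
    payload = json.dumps({"prompt": prompt, "max_length": max_length}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + "/api/v1/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body: bytes) -> str:
    """Pull the generated text out of the assumed response shape."""
    data = json.loads(response_body)
    return data["results"][0]["text"]


def generate(prompt: str, max_length: int = 80) -> str:
    """Send the prompt to the local server and return the continuation."""
    with urllib.request.urlopen(build_request(prompt, max_length), timeout=120) as resp:
        return extract_text(resp.read())
```

With the application running and a model loaded, `generate("Once upon a time")` would return the model's continuation. Because the request goes to `localhost`, the prompt never leaves the machine.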

System Requirements

KoboldCPP functions well on modern PCs with a minimum of 8GB RAM and a compatible CPU. GPU support is optional but recommended for improved performance, especially in text generation tasks.

Additional Considerations

First-time users may find the initial setup slightly technical, but the process is quick once they are familiar with it. Users whose systems are limited in RAM or storage space should start with smaller models.

Conclusion

KoboldCPP offers an accessible and efficient way to harness AI technology privately, making it an excellent choice for writers, gamers, and anyone seeking to leverage AI capabilities without cloud reliance. Its strong community support and flexibility ensure that users can effectively customize their AI experience. Whether you need assistance selecting a model or configuring your setup, the KoboldCPP community is ready to help.
