KoboldCPP 1.88 has been released, offering users a powerful tool for running AI models directly on their computers without the need for internet access, accounts, or subscriptions. This open-source application is ideal for those who want to engage in private projects such as writing, game development, or experimenting with AI in a secure environment.

KoboldCPP serves as a lightweight backend for the KoboldAI web interface, allowing users to interact with AI models in a chat-like interface similar to ChatGPT, but all processes occur locally. It supports GGUF-format AI language models, which are optimized for local use and efficient performance on mid-range PCs. Users can easily launch the application, select a model, and begin chatting, with support for both CPU and GPU acceleration.
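Because everything runs locally, the same backend the chat interface talks to can also be reached from a script. The following is a minimal sketch, not an official client. It assumes KoboldCPP is listening on `localhost` port 5001 and exposes the KoboldAI-style `/api/v1/generate` endpoint; the helper names `build_generate_request` and `generate` are illustrative only, and the defaults should be checked against your own installation.

```python
import json
import urllib.request

# Assumption: a local KoboldCPP instance on its usual default port.
API_URL = "http://localhost:5001/api/v1/generate"


def build_generate_request(prompt, max_length=120, temperature=0.7, url=API_URL):
    """Build (but do not send) a text-generation request for a local server."""
    payload = {
        "prompt": prompt,
        "max_length": max_length,    # number of tokens to generate
        "temperature": temperature,  # sampling randomness
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    # Assumption: the reply has the shape {"results": [{"text": "..."}]}.
    return body["results"][0]["text"]
```

With a model loaded and the server running, `generate("Write one sentence about a friendly kobold.")` would return the model's continuation as a string. No request ever leaves the machine.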

The GGUF format, commonly expanded as GPT-Generated Unified Format, is a single-file model format designed for running AI models locally, with the aim of fast loading and efficient inference. Many models on Hugging Face are available in this format. KoboldCPP does not support proprietary models such as those from OpenAI, and it cannot load certain other formats.
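A quick way to confirm that a downloaded file really is GGUF is to inspect its first bytes. The sketch below assumes the little-endian header layout used by GGUF version 2 and later (a 4-byte `GGUF` magic, a 32-bit version, then 64-bit tensor and metadata counts). The function names are hypothetical helpers, not part of KoboldCPP.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts


def parse_gguf_header(header_bytes):
    """Return (version, tensor_count, metadata_kv_count) from raw header bytes.

    Assumes a little-endian GGUF v2+ header; raises ValueError otherwise.
    """
    if len(header_bytes) < HEADER_SIZE or header_bytes[:4] != GGUF_MAGIC:
        raise ValueError("data does not start with a GGUF header")
    return struct.unpack("<IQQ", header_bytes[4:HEADER_SIZE])


def read_gguf_header(path):
    """Read just enough of the file at `path` to parse its header."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))
```

Calling `read_gguf_header` on a model file either returns the three header fields or raises `ValueError`, which is a cheap sanity check before pointing KoboldCPP at a multi-gigabyte download.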

KoboldCPP is particularly beneficial for individuals who prefer not to share their data with cloud services, including writers, RPG creators, and developers. It provides a level of control and privacy that many users find appealing, particularly in environments with limited connectivity.

Key features of KoboldCPP include the ability to run large language models (LLMs) offline, fast performance with GPU acceleration, a simple setup process, compatibility with the KoboldAI web interface, and customization options for chat interactions.

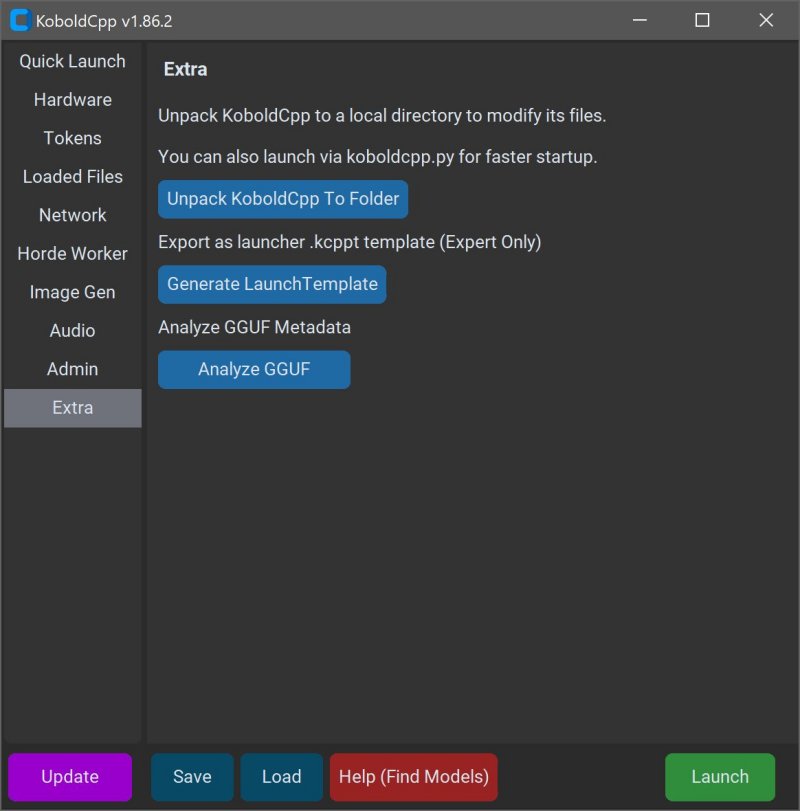

To use KoboldCPP, users must download compatible GGUF models separately, preferably from trusted sources such as Hugging Face. Setup consists of launching the application, selecting the desired model file, and adjusting settings before starting the chat interface.
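The same launch steps can be scripted instead of clicked through. The sketch below only assembles a command line. It assumes the executable is reachable as `koboldcpp` (the actual file name depends on the build you downloaded) and uses the `--model`, `--port`, `--contextsize`, and `--gpulayers` options; confirm them with `--help` on your version. The model path and the helper name `build_launch_command` are placeholders.

```python
import shlex
from pathlib import Path


def build_launch_command(model_path, executable="koboldcpp", port=5001,
                         context_size=4096, gpu_layers=0):
    """Assemble an argument list for starting KoboldCPP with a GGUF model."""
    model = Path(model_path)
    if model.suffix.lower() != ".gguf":
        raise ValueError(f"expected a .gguf model file, got: {model.name}")
    cmd = [
        executable,
        "--model", str(model),
        "--port", str(port),
        "--contextsize", str(context_size),
    ]
    # Only request GPU offloading when asked; 0 keeps everything on the CPU.
    if gpu_layers > 0:
        cmd += ["--gpulayers", str(gpu_layers)]
    return cmd


def format_for_shell(cmd):
    """Render the argument list as a copy-pasteable shell command."""
    return shlex.join(cmd)
```

The resulting list can be passed to `subprocess.run` to start the server, or printed with `format_for_shell` and pasted into a terminal.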

System requirements are moderate: at least 8GB of RAM is recommended, and both Windows and Linux are supported. Several builds of KoboldCPP are available for different hardware configurations, so users can choose the one that best matches their system.

In conclusion, KoboldCPP gives users a private AI experience right on their desktops, with no dependence on an internet connection and no need to hand data to a third party. It stands out as an excellent choice for anyone looking to use AI locally for creative or development projects. If you're interested in setting up KoboldCPP or selecting your first model, the community is ready to help.

KoboldCPP is a great choice for running powerful AI models on your computer with no accounts, internet, subscriptions, or privacy trade-offs.