Version 3.7.1 of the Oobabooga Text Generation Web UI has been released. It is a locally hosted, customizable interface for working with large language models (LLMs). The platform serves as a personal AI playground, letting users run a ChatGPT-like setup without sending data to the cloud, which keeps their conversations private and under their own control.

Built on the Gradio framework, this web UI provides a user-friendly environment for chat and text generation, enabling users to manage prompts, select models, and produce outputs independently. It caters to developers, researchers, and hobbyists interested in experimenting with LLMs locally, supporting various backends such as Hugging Face Transformers, llama.cpp, ExLlamaV2, and NVIDIA’s TensorRT-LLM via Docker. Users can load models, fine-tune them using LoRA, switch between chat modes, and utilize OpenAI-compatible APIs, all from a single interface.
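As an illustration of the OpenAI-compatible API mentioned above, here is a minimal sketch that builds a chat request and posts it to a locally running instance. The address `http://127.0.0.1:5000/v1/chat/completions` and the need to start the server with its API enabled (for example with an `--api` flag) are assumptions about a typical setup rather than details from this article, and the helper names `build_chat_request` and `send_chat_request` are hypothetical.

```python
import json
import urllib.request

# Assumed address of the local API; adjust it to match your own setup.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(prompt, temperature=0.7, max_tokens=200):
    """Build an OpenAI-style chat completion payload as a plain dict."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def send_chat_request(payload, url=API_URL, timeout=60):
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # OpenAI-style responses carry the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]


# Usage, with the web UI running locally and a model loaded:
# reply = send_chat_request(build_chat_request("Say hello in one sentence."))
# print(reply)
```

Because the request never leaves the local machine, this keeps the privacy benefit described above while letting existing OpenAI-style client code talk to a local model.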

The tool's standout feature is the extensive control it provides, allowing users to run models without internet access and customize their experience fully. Users can easily run LLMs locally, swap models without restarting, fine-tune them, access multi-modal features, save chat histories, and adjust advanced generation settings to suit their projects.

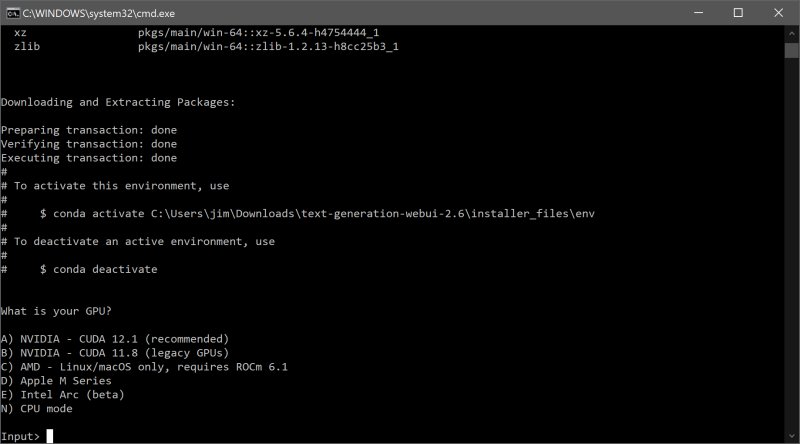

For installation, users need to ensure sufficient disk space: the initial download is only 28MB, but the installation can grow to around 2GB once the necessary components are downloaded. After unzipping the file, users start the program by running the script that matches their operating system. Once setup finishes, they can open the interface at a local web address and begin generating text or exploring AI models.
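The two pre-flight checks described above (enough free disk space, and the right start script for the operating system) can be sketched as follows. The script names `start_windows.bat`, `start_linux.sh`, and `start_macos.sh` are assumed from the project's repository layout, and the 2GB threshold simply mirrors the figure quoted above; both may differ for your download.

```python
import platform
import shutil

# Script names assumed from the project's repository layout.
START_SCRIPTS = {
    "Windows": "start_windows.bat",
    "Linux": "start_linux.sh",
    "Darwin": "start_macos.sh",  # platform.system() reports macOS as "Darwin"
}

# Roughly the 2GB the installation is said to need after its downloads.
REQUIRED_BYTES = 2 * 1024**3


def pick_start_script(system_name=None):
    """Return the start script name for the given (or current) OS."""
    name = system_name or platform.system()
    if name not in START_SCRIPTS:
        raise ValueError(f"No start script known for {name!r}")
    return START_SCRIPTS[name]


def has_enough_space(path=".", required=REQUIRED_BYTES):
    """Check whether the drive holding `path` has at least `required` bytes free."""
    return shutil.disk_usage(path).free >= required


# Usage, run from the unzipped folder:
# if has_enough_space("."):
#     print("Run:", pick_start_script())
```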

To run a model, users first download it from a repository such as Hugging Face, either manually or through the built-in download script, and then place it in the application's designated models folder. Recommended starting points are Mistral 7B for users with ample resources and TinyLlama for those with less powerful systems.
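For the manual route described above, the following sketch shows how a direct Hugging Face file link is formed and how to check what is already in the models folder. The folder location `user_data/models` is an assumption about the installation layout, and the repository and file names in the usage comment are placeholders, not real recommendations.

```python
from pathlib import Path

# Assumed location of the models folder; check your own installation.
MODELS_DIR = Path("user_data") / "models"


def hf_file_url(repo_id, filename, revision="main"):
    """Build the direct download link for one file in a Hugging Face repo."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def local_model_path(filename, models_dir=MODELS_DIR):
    """Return where a downloaded model file should be saved."""
    return Path(models_dir) / filename


def list_local_models(models_dir=MODELS_DIR):
    """List files and folders in the models directory, skipping hidden entries."""
    folder = Path(models_dir)
    if not folder.is_dir():
        return []
    return sorted(
        entry.name for entry in folder.iterdir()
        if not entry.name.startswith(".")
    )


# Usage with placeholder names:
# print(hf_file_url("example-org/example-model", "model.gguf"))
# print(local_model_path("model.gguf"))
# print(list_local_models())
```

Saving the file to the path returned by `local_model_path` places it where the interface expects to find models, so it should appear in the model selector after a refresh.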

In summary, the Oobabooga Text Generation Web UI is an excellent tool for AI enthusiasts who want a private and customizable environment to experiment with LLMs. It allows for extensive flexibility in testing and developing AI models without the constraints of cloud services. The tool is ideal for anyone interested in building applications, writing, or simply exploring the capabilities of AI in a local setup.

Extension: Future updates could focus on enhancing user experience by simplifying the installation process, providing extensive documentation, and introducing pre-configured model packages for easier setup. Additionally, community features like forums or collaboration tools could be implemented to foster a supportive environment for users to share insights and model configurations. Enhancements in performance optimization and additional integrations with popular AI tools could further solidify Oobabooga’s position as a go-to platform for local AI experimentation.

Oobabooga Text Generation Web UI 3.7.1 released @ MajorGeeks