The Oobabooga Text Generation Web UI has reached version 3.3, offering a customizable, locally hosted interface for interacting with large language models (LLMs). The platform lets users create a ChatGPT-like experience while keeping their data private, since inference runs locally and requires neither internet access nor cloud services. Built with Gradio, the web UI provides a user-friendly environment for text generation and chat, appealing to developers, researchers, and hobbyists alike.

Key features include support for multiple backends such as Hugging Face Transformers and NVIDIA’s TensorRT-LLM, the ability to fine-tune models with LoRA, and an OpenAI-compatible API. Users can easily switch between models, load extensions for added functionality, and save chat histories, all within a clean browser interface.
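Because the API follows the OpenAI chat-completions format, a local script can talk to the web UI much as it would to a hosted service. The following is a minimal sketch, not the project's own client: the address `http://127.0.0.1:5000` and the helper names `build_payload`, `extract_reply`, and `chat` are assumptions for illustration, and the server is assumed to be running with its API enabled and a model already loaded. Some OpenAI-compatible servers also expect a `model` field in the request body.

```python
import json
import urllib.request

# Assumption: the web UI's API is enabled and reachable at this address.
# Adjust host and port to match your own setup.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_payload(user_message, history=None, max_tokens=200):
    """Build a chat-completions request body in the OpenAI message format."""
    messages = list(history or [])
    messages.append({"role": "user", "content": user_message})
    return {"messages": messages, "max_tokens": max_tokens}


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def chat(user_message, history=None, url=API_URL, timeout=120):
    """Send one chat turn to the local server and return the reply text."""
    data = json.dumps(build_payload(user_message, history)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.loads(response.read().decode("utf-8")))
```

With the server running, `chat("Explain LoRA in one sentence.")` would return the model's reply as a string. Since the request never leaves `127.0.0.1`, the prompt and the response stay on the local machine.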

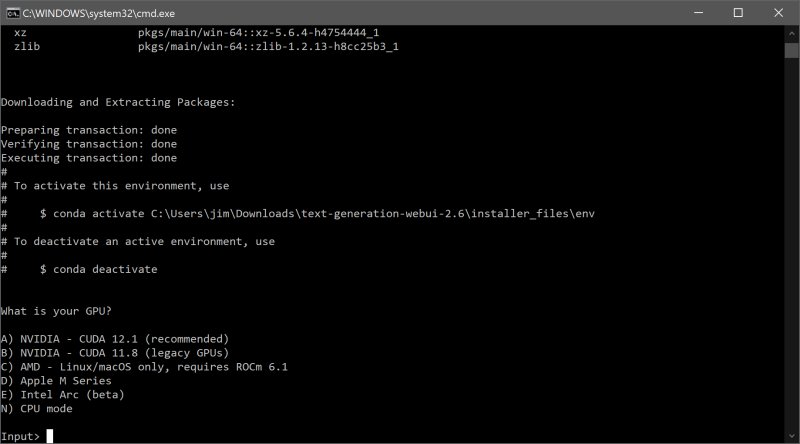

Installation requires some familiarity with Python, and the setup can take up to 2 GB of disk space. Users must also download a model suited to their hardware; recommendations include Mistral 7B for powerful systems and TinyLlama for less capable ones.
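To see why model choice depends on hardware, a back-of-the-envelope estimate of weight memory helps. The sketch below is a rough approximation under stated assumptions: it counts only the weights (parameter count times bits per weight), ignores the context cache and runtime overhead, and uses approximate parameter counts of 7.2 billion for Mistral 7B and 1.1 billion for TinyLlama. The function name `weight_memory_gib` is hypothetical.

```python
def weight_memory_gib(params_billion, bits_per_weight):
    """Approximate memory for the weights alone, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Approximate parameter counts in billions; illustrative inputs only.
MODELS = {"Mistral 7B": 7.2, "TinyLlama": 1.1}

for name, params in MODELS.items():
    for bits in (16, 8, 4):  # full half-precision and two common quantization widths
        size = weight_memory_gib(params, bits)
        print(f"{name} at {bits}-bit: ~{size:.1f} GiB for weights")
```

Actual requirements are higher than these figures, but the ratio between the two models gives a sense of why the smaller one suits modest machines.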

The Oobabooga Text Generation Web UI is praised for its local operation and extensive customization options, but setup can involve a learning curve, and resource demands vary with the model used. Overall, it serves as an excellent tool for anyone interested in exploring the capabilities of LLMs without the constraints of cloud dependency.

Extended Insights

As the AI landscape continues to evolve, tools like Oobabooga provide a vital resource for experimentation and development. The emphasis on local processing not only enhances privacy but also allows for greater control over the AI models. This is particularly important in fields like research and content creation, where data sensitivity is paramount.

Moreover, the ability to fine-tune models and switch between them flexibly opens up new avenues for personalized applications, whether for developing chatbots or generating creative content. The built-in extension support could further enhance the user experience by enabling multimodal functionality, which could be especially beneficial in areas like educational tools and interactive storytelling.

In a world increasingly reliant on AI, Oobabooga stands out as a platform that empowers users to take charge of their AI interactions. As the community around such tools grows, we can expect to see a surge in innovative applications and a deeper understanding of LLM capabilities, fostering a vibrant ecosystem for AI advancement. Whether you are a seasoned developer or a curious hobbyist, Oobabooga offers a playground to explore the vast potential of language models.
