Oobabooga Text Generation Web UI 3.12 has been released, offering a locally hosted and customizable interface for interacting with large language models (LLMs). Designed for users interested in running their own AI setups without relying on cloud services, Oobabooga provides a user-friendly environment for text generation and chat functionalities. Built using Gradio, a Python library for creating web interfaces for machine learning, it allows users to control various aspects such as model selection and prompt input while maintaining data privacy.

The platform supports multiple backends, including Hugging Face Transformers, llama.cpp, ExLlamaV2, and NVIDIA’s TensorRT-LLM, and the setup can be managed through Docker. Users can fine-tune models with LoRA, switch between chat modes, and use an OpenAI-compatible API, all from one interface. Built-in extension support adds further functionality, and features such as streaming output and multimodal tasks all run within a responsive browser environment.

Key features of Oobabooga include:

- Running LLMs locally, with no reliance on an internet connection or the OpenAI API.

- Swapping models without restarting the application.

- Fine-tuning models with LoRA.

- OpenAI-compatible APIs for chat or text completion.

- Loading multiple extensions for additional features.

- Saving chat histories for future reference.

- Customizing advanced generation settings for tailored outputs.
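As a rough illustration of the OpenAI-compatible API listed above, the sketch below builds a chat completion request using only the Python standard library. The base URL `http://127.0.0.1:5000/v1`, the omission of a `model` field (on the assumption that the server answers with whichever model is currently loaded), and the helper names `build_chat_request` and `chat` are assumptions made for this example, not details taken from the release notes. Adjust them to match your own setup.

```python
import json
import urllib.request

# Assumption: the web UI was started with its API enabled and listens here.
BASE_URL = "http://127.0.0.1:5000/v1"


def build_chat_request(prompt, base_url=BASE_URL, max_tokens=200):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        # Assumption: no "model" field is sent; the loaded model answers.
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, base_url=BASE_URL):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, base_url)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-style responses place the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `chat("Hello")` would return the generated reply as a string. Building the request separately from sending it makes the payload easy to inspect before anything goes over the wire.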

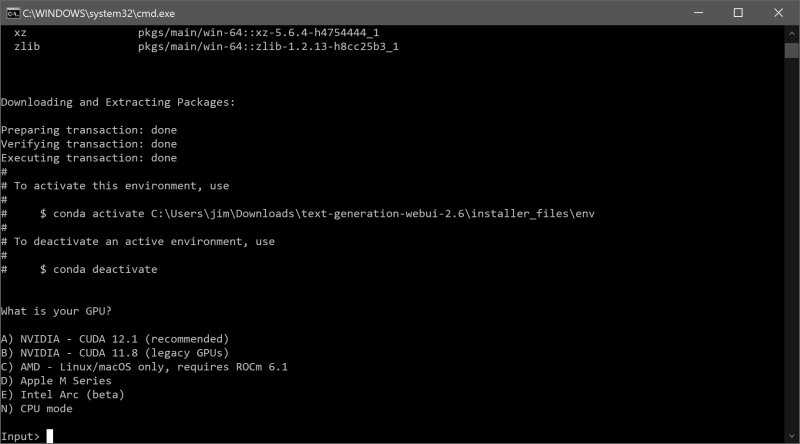

The installation process, while requiring some familiarity with Python and the command line, is straightforward. Users need to ensure sufficient disk space, download the necessary files, and execute the correct startup script for their operating system (Windows, macOS, or Linux). After selecting their GPU vendor, users can launch the application by navigating to the local web address.
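The "correct startup script" step can be sketched as a small lookup keyed on the operating system. The script names and the local address below are assumptions based on the project's usual layout and Gradio's default port, and `startup_script` is a hypothetical helper written for this example. Check the names against the files you actually downloaded.

```python
import platform

# Assumption: these are the startup script names shipped with the download.
START_SCRIPTS = {
    "Windows": "start_windows.bat",
    "Darwin": "start_macos.sh",  # platform.system() reports macOS as "Darwin"
    "Linux": "start_linux.sh",
}

# Assumption: Gradio's default local address; the console output shows the real one.
LOCAL_URL = "http://127.0.0.1:7860"


def startup_script(system=None):
    """Return the startup script name for the given (or current) OS."""
    system = system or platform.system()
    try:
        return START_SCRIPTS[system]
    except KeyError:
        raise ValueError(f"No known startup script for {system!r}") from None
```

Calling `startup_script()` with no argument uses the current machine's operating system. After the script finishes its first-run setup, the interface should be reachable in a browser at the address printed in the console.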

To run a model, users can download compatible models from sources like Hugging Face and place them in the designated models folder within the Oobabooga directory. The application also recommends starting with models like Mistral 7B or TinyLlama, depending on available system resources.
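To make "based on available system resources" more concrete, here is a back-of-the-envelope estimate of how much memory a model's weights need at a given precision. It is a minimal sketch. The parameter counts are nominal (7 billion for Mistral 7B, 1.1 billion for TinyLlama), the 20% overhead factor for context and runtime buffers is an assumption, and the function names are hypothetical.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    # params_billion * 1e9 weights * (bits / 8) bytes each, divided by 1e9.
    return params_billion * bits_per_weight / 8


def fits(params_billion, bits_per_weight, available_gb, overhead=1.2):
    """Rough check: do the weights plus an assumed overhead fit in memory?"""
    needed = weight_memory_gb(params_billion, bits_per_weight) * overhead
    return needed <= available_gb


# Nominal sizes: a 7B model at 16-bit vs. 4-bit, and a 1.1B model at 4-bit.
print(weight_memory_gb(7, 16))   # 14.0
print(weight_memory_gb(7, 4))    # 3.5
print(fits(1.1, 4, available_gb=8))
```

By this estimate, a 7B model at 16-bit precision will not fit in 8 GB of memory, while a 4-bit quantized version or a small model such as TinyLlama plausibly will. Real usage also depends on context length and the backend in use.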

Oobabooga's strengths include fully local operation, support for multiple backends, and an OpenAI-compatible API that lets existing OpenAI-style clients talk to a local model. However, it can be resource-intensive, and the setup may present a learning curve for some users.

In summary, Oobabooga Text Generation Web UI is an ideal tool for AI enthusiasts, developers, and researchers looking to experiment with LLMs in a controlled and private environment. Its flexibility and comprehensive feature set make it a valuable addition to any AI toolkit, allowing users to explore the capabilities of language models without cloud dependency.

