Oobabooga Text Generation Web UI 2.7 has been released. It provides a locally hosted, customizable interface for working with large language models (LLMs), which suits anyone who wants to experiment with AI without sending their data to the cloud. Built with Gradio, a Python library for creating web-based user interfaces, it offers a browser-based environment for text generation and chat.

Key features of the Oobabooga Text Generation Web UI include support for several backends, including Hugging Face Transformers and NVIDIA’s TensorRT-LLM, as well as the ability to load models, fine-tune them with LoRA, and switch between different chat modes. The tool also exposes an OpenAI-compatible API, accepts extensions for added functionality, and saves chat histories for later use. Overall, it lets developers, researchers, and hobbyists test and deploy AI models locally while keeping their data private.
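As a rough illustration of what the OpenAI-compatible API makes possible, the sketch below builds and sends a chat completion request using only the Python standard library. The address `http://127.0.0.1:5000/v1/chat/completions` is an assumption (it presumes the web UI was started with its API enabled on the default port), and the `model` field is left out on the assumption that the server answers with whichever model is currently loaded. Check both against your own setup.

```python
import json
import urllib.request

# Assumption: the web UI is running locally with its API enabled at this address.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(prompt, max_tokens=200, url=API_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat("Summarize what a LoRA adapter is in one sentence."))
```

Because the request never leaves the local machine, this keeps the privacy benefit described above while letting existing OpenAI-style client code be pointed at a local model.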

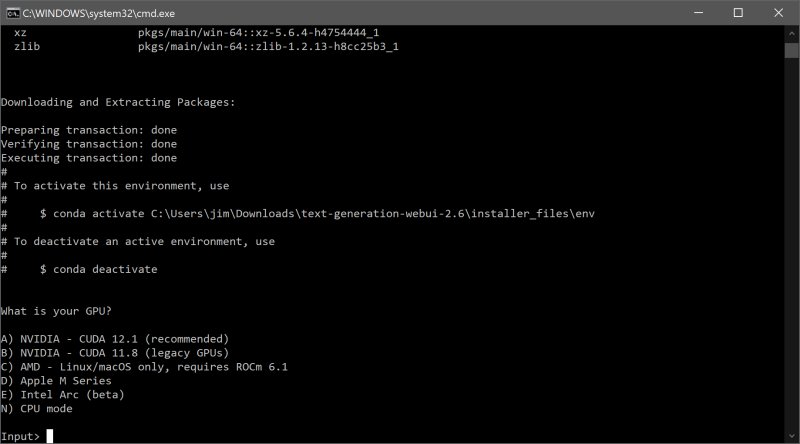

To install and run the Oobabooga Text Generation Web UI, users should first make sure they have enough free disk space, as the installation can take around 2 GB before any models are added. Setup involves downloading the zip file, extracting it, and running the start file that matches their operating system. After installation, users can download AI models from sources like Hugging Face and run them within the interface.
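The pre-install checks described above can be sketched in a few lines of Python. This is only an illustration: the 2 GB threshold comes from the figure quoted here, and the start script names in `START_SCRIPTS` are assumptions based on the project's repository layout, so verify them against the files in the extracted folder.

```python
import platform
import shutil

REQUIRED_BYTES = 2 * 1024**3  # roughly the 2 GB install size quoted above

# Assumption: script names as they appear in the extracted folder; verify locally.
START_SCRIPTS = {
    "Windows": "start_windows.bat",
    "Linux": "start_linux.sh",
    "Darwin": "start_macos.sh",
}


def has_enough_space(free_bytes, required_bytes=REQUIRED_BYTES):
    """Return True if the free space covers the expected install size."""
    return free_bytes >= required_bytes


def pick_start_script(system_name):
    """Map a platform.system() value to the matching start script name."""
    try:
        return START_SCRIPTS[system_name]
    except KeyError:
        raise ValueError(f"No known start script for {system_name!r}") from None


if __name__ == "__main__":
    free = shutil.disk_usage(".").free
    if not has_enough_space(free):
        raise SystemExit(f"Only {free / 1024**3:.1f} GB free; about 2 GB is needed.")
    print("Run:", pick_start_script(platform.system()))
```

Model files are usually far larger than the installation itself, so the free-space threshold is worth raising if models will be stored on the same drive.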

The tool presents several advantages, such as being fully local and private, supporting multiple backends, and allowing model swapping. However, it can be resource-intensive, and the manual setup may pose a challenge for some users.

In summary, Oobabooga Text Generation Web UI is a versatile and powerful tool for anyone interested in AI, providing a hands-on experience with LLMs while ensuring data privacy. With its customizable options and ease of use, it stands out as a valuable resource for AI enthusiasts looking to explore the capabilities of language models.

Extension:

The release of Oobabooga Text Generation Web UI 2.7 reflects a growing trend in AI development that prioritizes user control and data privacy. As individuals and organizations become more concerned about data security and the implications of cloud-based AI models, local interfaces like Oobabooga offer a compelling alternative.

Furthermore, the increasing support for various backends and models allows users to tailor their experience to specific needs, whether for academic research, content creation, or software development. As AI technology continues to evolve, it is likely that tools like Oobabooga will incorporate even more advanced features, such as improved model training capabilities and enhanced user interfaces.

Additionally, as the community around Oobabooga grows, users may benefit from shared resources, tutorials, and model recommendations, fostering a collaborative environment for learning and experimentation. This could lead to innovations in how we interact with AI and harness its potential across diverse fields.

In conclusion, Oobabooga Text Generation Web UI not only serves as a playground for AI enthusiasts but also represents a shift towards more decentralized and user-centric AI solutions, paving the way for future developments in the field.
