Ollama 0.6.6 has been released. Ollama is a local-first platform that lets users run large language models (LLMs) directly on their desktops instead of relying on cloud services. Once a model has been downloaded, it runs entirely offline, with no account or internet connection required. Ollama supports well-known models such as Llama 3.3, Phi-4, Mistral, and DeepSeek, making it an attractive choice for developers, hobbyists, and privacy-conscious users.

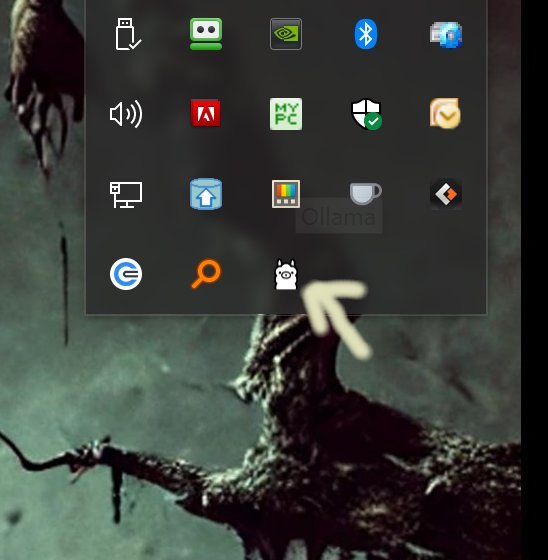

Installation is straightforward: download and run the installer, and once running, Ollama sits in the system tray. It is available for Windows, macOS, and Linux, and anyone familiar with command-line interfaces (CLIs) can be up and running quickly. From there, users can chat with models, switch between them, or create custom models using simple commands or Modelfiles.
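As a rough illustration of the Modelfile workflow, the Python sketch below generates a minimal Modelfile that layers a system prompt and a temperature setting on top of a base model, then hands it to `ollama create`. `FROM`, `PARAMETER`, and `SYSTEM` are standard Modelfile instructions; the helper names `build_modelfile` and `create_model`, the model name `concise-llama`, and the file path are hypothetical choices for this example, and the base model (`llama3.3` here) is assumed to have been pulled already.

```python
import subprocess
from pathlib import Path


def build_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return Modelfile text that layers a system prompt and a sampling
    parameter on top of an existing base model (hypothetical helper)."""
    # FROM names the base model, PARAMETER sets a sampling option, and
    # SYSTEM sets the system message; triple quotes allow multi-line prompts.
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


def create_model(name: str, modelfile_text: str, path: str = "Modelfile") -> None:
    """Write the Modelfile to disk and register it with the local Ollama
    install via `ollama create NAME -f PATH` (requires the ollama CLI)."""
    Path(path).write_text(modelfile_text, encoding="utf-8")
    # check=True raises CalledProcessError if the CLI reports a failure.
    subprocess.run(["ollama", "create", name, "-f", path], check=True)


if __name__ == "__main__":
    text = build_modelfile("llama3.3", "You are a concise assistant.")
    print(text)
    # Uncomment once Ollama is installed and llama3.3 has been pulled:
    # create_model("concise-llama", text)
```

After `create_model` succeeds, the custom model can be used like any other, for example with `ollama run concise-llama`.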

Ollama prioritizes local execution for data privacy: prompts and responses stay on the user's device, there is no network round-trip adding latency, and the risks associated with cloud-based services are avoided. Its CLI offers extensive customization, letting users define system instructions, set default prompts, and import models in various formats, such as GGUF. For developers, official Python and JavaScript libraries and a REST API make it straightforward to integrate Ollama into applications.
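Ollama's official Python and JavaScript libraries are one route to integration; to avoid any third-party dependency, the sketch below instead calls the REST API directly with Python's standard library. It assumes the Ollama server is running on its default address, `http://localhost:11434`, and that the requested model has already been pulled. The helper names `build_generate_request` and `generate` are hypothetical; the `/api/generate` endpoint, its `model`, `prompt`, `system`, and `stream` fields, and the `response` field of the reply come from Ollama's API documentation.

```python
import json
import urllib.request
from typing import Optional

# Assumption: the Ollama server is listening on its default local port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, system: Optional[str] = None) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    if system is not None:
        # Overrides the system message defined in the model's Modelfile.
        payload["system"] = system
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, system: Optional[str] = None) -> str:
    """Send the request and return the generated text from the
    "response" field of the server's JSON reply."""
    req = build_generate_request(model, prompt, system)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("llama3.3", "Why is the sky blue?", system="Answer in one sentence.")` returns the model's reply as a string. Setting `"stream": False` trades incremental output for simpler parsing; with streaming left on, the server instead returns a sequence of newline-delimited JSON objects.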

While Ollama excels as a command-line tool, users who prefer graphical interfaces may find it less appealing, as it lacks a built-in GUI. Alternatives such as OLamma and LM Studio offer graphical or browser-based interfaces, but they may not provide the same level of command-line control. On the plus side, Ollama's documentation is comprehensive and helps users navigate the CLI efficiently.

In summary, Ollama is an efficient, privacy-oriented platform that is ideal for users comfortable with the command line. Its high degree of customization suits both casual users and developers who want to harness local LLMs without depending on the cloud. For those who prioritize control and performance in their AI tools, Ollama stands out as a leading choice.
