Ollama has released version 0.11.11, reinforcing its position as a local-first platform for running large language models (LLMs) directly on the desktop, with no cloud services or accounts required. That makes it appealing to developers, privacy advocates, and tech enthusiasts who want to use advanced models such as Llama 3.3, Phi-4, and Mistral offline.

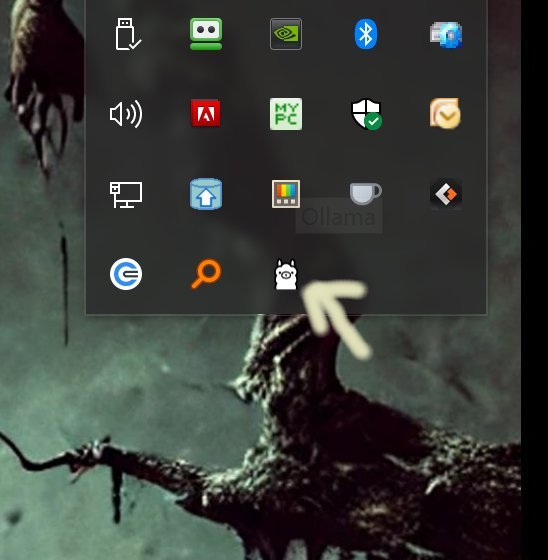

Installation is straightforward: download Ollama and run it, and a llama icon appears in the system tray for easy access. The platform is designed for efficiency, letting users interact with LLMs quickly and privately on Windows, macOS, and Linux. Ollama’s command-line interface (CLI) makes interactions smooth and scriptable, and models can be customized through Modelfiles, which can import various model formats and let users tweak prompts or create specialized assistants.
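
As a rough illustration of the Modelfile workflow described above, the sketch below generates a small Modelfile and the matching `ollama create` command. It assumes the `mistral` base model has already been pulled; the helper names (`build_modelfile`, `create_command`), the assistant name `reviewer`, and the prompt text are hypothetical choices for this example, not part of Ollama itself.

```python
from pathlib import Path


def build_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return Modelfile text that derives a custom assistant from a base model.

    Uses three standard Modelfile instructions: FROM, PARAMETER, and SYSTEM.
    """
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


def create_command(model_name: str, modelfile_path: str) -> list[str]:
    """Return the CLI invocation that registers the custom model with Ollama."""
    return ["ollama", "create", model_name, "-f", modelfile_path]


if __name__ == "__main__":
    # Hypothetical assistant: a terse code reviewer built on an assumed local model.
    text = build_modelfile("mistral", "You are a concise code reviewer.", 0.2)
    Path("Modelfile").write_text(text, encoding="utf-8")
    print(text)
    # Print the command to run next; after that, `ollama run reviewer` starts a chat.
    print(" ".join(create_command("reviewer", "Modelfile")))
```

Running the printed `ollama create` command registers the assistant locally, after which it can be started like any other model with `ollama run reviewer`.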

Developers will find Ollama particularly useful because it offers Python and JavaScript libraries that make it easy to integrate into applications. The CLI provides extensive customization options, including the ability to set system instructions and manage multiple models. Although Ollama is primarily designed for command-line use, other interfaces are available, albeit with some limitations in functionality.
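
To give a sense of what integration through the Python library can look like, here is a minimal sketch that sends a system instruction and a user prompt to a local model. It assumes the `ollama` package is installed (`pip install ollama`), the Ollama server is running locally, and a model named `mistral` has been pulled; `build_messages` and `ask` are hypothetical helper names introduced for this example.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict]:
    """Build a chat history with a system instruction followed by the user's prompt."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def ask(model: str, system_prompt: str, user_prompt: str) -> str:
    """Send one chat request to a locally running Ollama server and return the reply text."""
    # Imported here so the helper above stays usable without the package installed.
    import ollama  # third-party: pip install ollama

    response = ollama.chat(model=model, messages=build_messages(system_prompt, user_prompt))
    return response["message"]["content"]


if __name__ == "__main__":
    # Assumes `ollama pull mistral` has been run beforehand.
    print(ask("mistral", "Answer in one sentence.", "What is a Modelfile?"))
```

Keeping the message construction separate from the network call makes the prompt logic easy to test without a running model.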

For users who prefer graphical interfaces, alternatives like LM Studio offer a full GUI, but may not provide the same level of control as the CLI. Ollama's documentation is comprehensive, covering everything from basic commands to advanced Modelfile setups, so users can get the most out of the tool.

In summary, Ollama 0.11.11 is a robust tool for anyone looking to harness the power of LLMs locally. Its focus on privacy, speed, and flexibility makes it an excellent choice for those comfortable with command-line operations. However, users who favor graphical interfaces may find the lack of a built-in GUI a drawback. As Ollama continues to evolve, it promises to deliver more features and enhancements for local LLM usage, catering to the growing demand for privacy-conscious AI solutions.
